Metadata Manager and Experiment

Dataset Location

First, MetadataManager needs to be configured with some parameters. The following parameters are mandatory:

- dataFolderPattern: pattern whose keys are replaced by parameter values to determine the dataset location. For instance: {dataRoot}/{proposal}/{beamlineID}/{sampleName}/{scanName}

- globalHDFfiles: pattern whose keys are replaced by parameter values to determine where the global HDF5 file will be stored. For instance: {dataRoot}/{proposal}/{beamlineID}/{proposal}-{beamlineID}.h5

- beamlineId: name of the beamline. For instance: id30a3, bm31

If a datasetParentFolder is specified, it is used to determine the final dataset location; otherwise the dataFolderPattern is used:

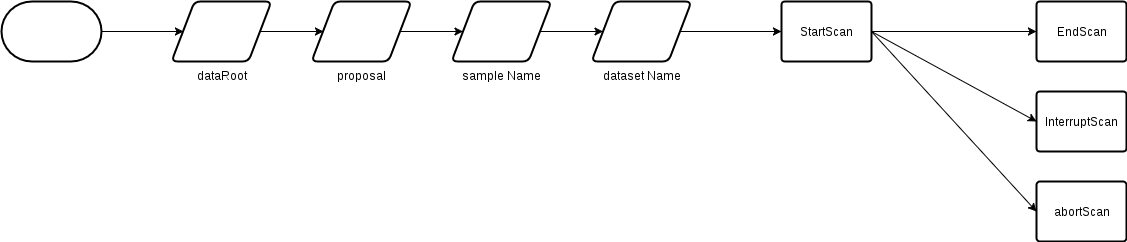

DataFolderPattern

This flow chart explains all steps needed to store a dataset.

In the previous example the final dataset folder will be determined by the dataFolderPattern.

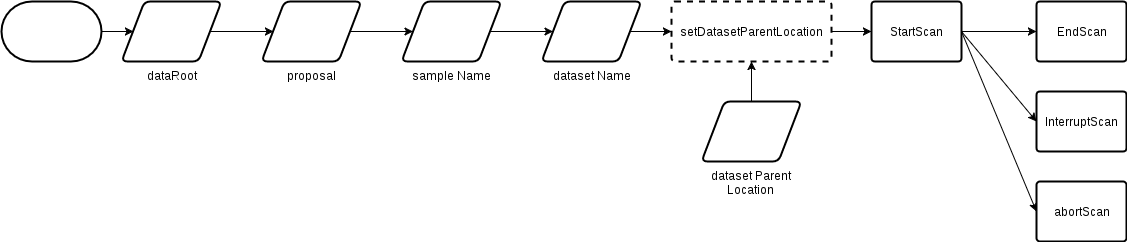
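The replacement of keys in dataFolderPattern and globalHDFfiles can be pictured with Python's str.format. This is only a minimal sketch and not the MetadataManager implementation: the function name expand_pattern and all parameter values below are assumptions chosen for illustration.

```python
def expand_pattern(pattern, parameters):
    """Replace every {key} in pattern with the matching value from parameters."""
    try:
        return pattern.format(**parameters)
    except KeyError as missing:
        # A key used in the pattern has no value: fail loudly rather than guess.
        raise ValueError("No value for pattern key %s" % missing)


# Illustrative values only; real values come from the device configuration.
parameters = {
    "dataRoot": "/data/visitor",
    "proposal": "MA1234",
    "beamlineID": "id01",
    "sampleName": "sample1",
    "scanName": "scan1",
}

dataset_folder = expand_pattern(
    "{dataRoot}/{proposal}/{beamlineID}/{sampleName}/{scanName}", parameters)
hdf_file = expand_pattern(
    "{dataRoot}/{proposal}/{beamlineID}/{proposal}-{beamlineID}.h5", parameters)

print(dataset_folder)  # /data/visitor/MA1234/id01/sample1/scan1
print(hdf_file)        # /data/visitor/MA1234/id01/MA1234-id01.h5
```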

Specific dataset Location

It is possible to force the dataset location by using the method setDatasetParentLocation. This method takes a string containing the parent folder of the dataset.

Example:

metadataManager.setDatasetParentLocation("/data/visitor/MA1234/id01/12042018")

In this example the final dataset's folder will be /data/visitor/MA1234/id01/12042018/{scanName}

Three methods implement this functionality:

- setDatasetParentLocation is optional. Its argument can be either a pattern whose keys will be replaced, for instance {dataRoot}/{proposal}/Jonh/{sampleName}/20180418, or an absolute path, for instance /data/visitor/MA1234/id01/Johh/12042018

- getDatasetParentLocation returns the value of datasetParentLocation

- clearDatasetParentLocation sets the value of datasetParentLocation to null, so that dataFolderPattern is used instead.

In both cases (pattern or absolute path), the location points to the parent folder of the dataset.
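The interplay between the three methods and dataFolderPattern can be modelled in a few lines of Python. The class below is a hypothetical stand-in written for illustration, not the Tango device itself; the class name, the snake_case method names and the parameter values are all assumptions.

```python
class DatasetLocationResolver(object):
    """Hypothetical model of the parent-location logic described above."""

    def __init__(self, data_folder_pattern, parameters):
        self.data_folder_pattern = data_folder_pattern
        self.parameters = parameters
        self.dataset_parent_location = None

    def set_dataset_parent_location(self, location):
        # Accepts a pattern with {keys} or an absolute path.
        self.dataset_parent_location = location

    def get_dataset_parent_location(self):
        return self.dataset_parent_location

    def clear_dataset_parent_location(self):
        # Back to null: dataFolderPattern is used again.
        self.dataset_parent_location = None

    def dataset_folder(self):
        if self.dataset_parent_location is not None:
            # The parent location names the parent; the scan name is appended.
            parent = self.dataset_parent_location.format(**self.parameters)
            return parent + "/" + self.parameters["scanName"]
        return self.data_folder_pattern.format(**self.parameters)


# Illustrative values only.
params = {"dataRoot": "/data/visitor", "proposal": "MA1234",
          "beamlineID": "id01", "sampleName": "sample1", "scanName": "scan1"}
resolver = DatasetLocationResolver(
    "{dataRoot}/{proposal}/{beamlineID}/{sampleName}/{scanName}", params)

default_folder = resolver.dataset_folder()
resolver.set_dataset_parent_location("/data/visitor/MA1234/id01/12042018")
forced_folder = resolver.dataset_folder()
resolver.clear_dataset_parent_location()
restored_folder = resolver.dataset_folder()

print(default_folder)   # /data/visitor/MA1234/id01/sample1/scan1
print(forced_folder)    # /data/visitor/MA1234/id01/12042018/scan1
print(restored_folder)  # /data/visitor/MA1234/id01/sample1/scan1
```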

Requirements

MetadataManager has two optional dependencies: graypy (https://pypi.python.org/pypi/graypy) and requests.

requests is required for sending notifications to the elogbook, and graypy is required to use Graylog.

They can be installed with:

easy_install graypy

easy_install requests

or

pip install -U graypy

pip install requests

Debian 6

Recommended solution is:

First try to use requests as supplied by Debian. If you run into issues, then try to install the downloaded packages (they install a much newer version).

The module graypy can easily be installed in any case by downloading the source package (pip install or python setup.py, whichever works).
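Because both modules are optional, it can be useful to check which of them are importable before relying on elogbook notifications or Graylog. The sketch below assumes Python 3.4 or later (for importlib.util.find_spec); the function name is an assumption made for this example and is not part of MetadataManager.

```python
import importlib.util


def missing_optional_dependencies(names=("graypy", "requests")):
    """Return the names of the optional modules that cannot be imported."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_optional_dependencies()
if missing:
    print("Optional modules not installed: %s" % ", ".join(missing))
else:
    print("graypy and requests are both available")
```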

Configuration

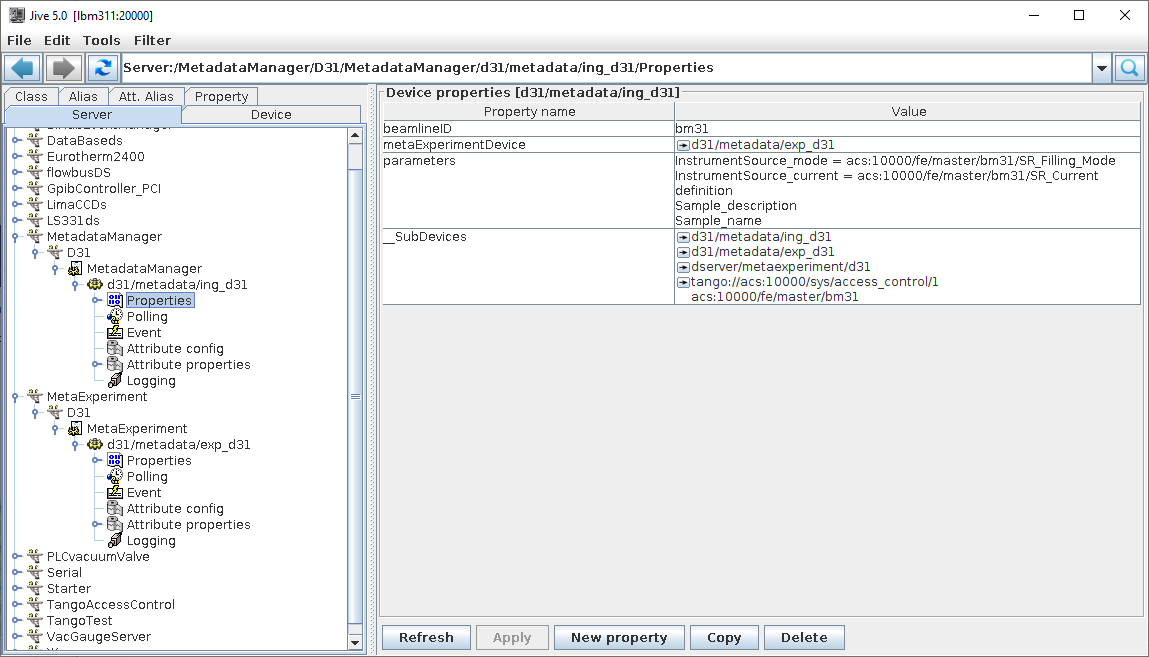

Tango

Using automatic tool to generate tango properties

There is a script that can be found in /scripts/GenerateMetaDataResource folder that generates the right starting configuration for a beamline. Usage:

Usage: GenerateMetaDataResources.py -b BL_NAME [options]

MetaData TANGO DEVICE SERVER RESOURCES GENERATOR

Options:

  -h, --help            show this help message and exit

  -b BL_NAME, --beamline=BL_NAME
                        the beam line name

  -m MEMBER_NAME, --member_name=MEMBER_NAME
                        the member part of the three part device (domain/family/member)

  -p PERSONAL_NAME, --personal_name=PERSONAL_NAME
                        the personal name of the Device Server

Example:

GenerateMetaDataResources.py -b id99

Then use Jive -> File/Load property file to load the generated file.

Example of generated files:

MetadataManager_ID99.tango

MetaExperiment_ID99.tango

ICAT Reader

In versions after release-v1.63, MetadataManager has a new function called CheckParameters. This function compares the list of parameters with the ICAT database and displays a message if there are parameters that are not supported by the current ICAT configuration.

For instance:

----------------------------------------------------

Command: id00/metadata/mgr/CheckParameters

Duration: 143 msec

Output argument(s) :

----------------------------------------------------

[ERROR] 2 parameters are unknown by ICAT. Ingestion will fail.

[Parameter] myParameters

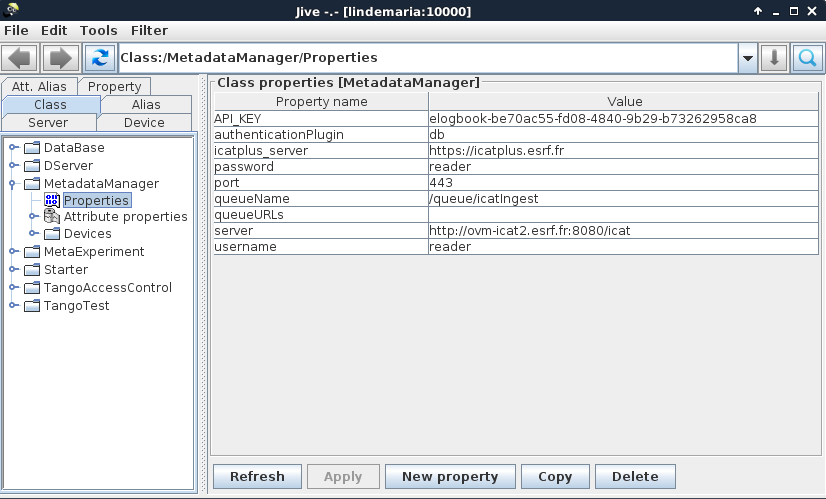

[Parameter] myLabels

To configure access from the MetadataManager to ICAT, the following parameters need to be set in the class properties:

- AuthenticationPlugin: db

- username: reader

- password: reader

- port: 443

- server: icat.esrf.fr
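Once access is configured, the check performed by CheckParameters amounts to a set difference between the configured parameter names and the names that ICAT knows. The sketch below illustrates that idea only: the function, its arguments and the name knownParameter are hypothetical, and the message format is simply copied from the sample output above rather than taken from the device's source.

```python
def check_parameters(parameters, icat_parameters):
    """Return report lines for the parameters that ICAT does not know."""
    known = set(icat_parameters)
    unknown = [name for name in parameters if name not in known]
    if not unknown:
        return []
    report = ["[ERROR] %d parameters are unknown by ICAT. Ingestion will fail."
              % len(unknown)]
    report.extend("[Parameter] %s" % name for name in unknown)
    return report


# Hypothetical parameter names, for illustration only.
configured = ["knownParameter", "myParameters", "myLabels"]
defined_in_icat = ["knownParameter"]

report = check_parameters(configured, defined_in_icat)
for line in report:
    print(line)
```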

ICAT+

MetadataManager can send notifications to ICAT+. To do so, the following parameters need to be added to the class properties:

OBJ_PROPERTY:API_KEY: elogbook-be70ac55-fd08-4840-9b29-b73262958ca8

OBJ_PROPERTY:icatplus_server: "http://lindemaria:8000"

Sum Up

The following class parameters need to be defined:

Non-Debian6

API_KEY: elogbook-be70ac55-fd08-4840-9b29-b73262958ca8

authenticationPlugin: "db"

icatplus_server: "https://icatplus.esrf.fr"

password: "reader"

port: "443"

queueName: "/queue/icatIngest"

queueURLs: bcu-mq-01.esrf.fr:61613,\

bcu-mq-02.esrf.fr:61613

server: "icat.esrf.fr"

username: "reader"

For Debian6

API_KEY: elogbook-be70ac55-fd08-4840-9b29-b73262958ca8

authenticationPlugin: "db"

icatplus_server: "https://icatplus.esrf.fr:8443"

password: "reader"

port: "443"

queueName: "/queue/icatIngest"

queueURLs: bcu-mq-01.esrf.fr:61613,\

bcu-mq-02.esrf.fr:61613

server: "icat.esrf.fr"

username: "reader"

Logging with Graylog

The Graylog configuration is currently hardcoded in the MetadataManager class. It is to be moved to a configuration file.

Build

Packaging

The Conda packages are built using the branch tango-metadata from our gitlab project git@gitlab.esrf.fr:bliss/conda-recipes.git.

Deploy

1.- ssh -X blissdb8 as yourself

lindemaria:~ % ssh -X demariaa@targetcomputer

2.- Create a suitable conda environment

targetcomputer:~ % conda create -n metadata tango-metadata

This installs the package and its dependencies within a new conda environment called metadata.

Elogbook

Requirements

Go to the Requirements section, where the dependencies are explained.

Usage

Python

Example:

#!/usr/bin/env python
"""A simple client for MetadataManager and MetaExperiment."""

import sys

import PyTango.client


class MetadataManagerClient(object):
    """
    A client for the MetadataManager and MetaExperiment tango Devices

    Attributes:
        name: name of the tango device. Example: 'id21/metadata/ingest'
    """

    # Tango device proxies, shared by all instances of this class
    metadataManager = None
    metaExperiment = None

    def __init__(self, metadataManagerName, metaExperimentName):
        """
        Return a MetadataManagerClient object whose metadataManagerName is
        *metadataManagerName* and metaExperimentName is *metaExperimentName*
        """
        self.proposal = None
        self.metadataManagerName = metadataManagerName
        self.metaExperimentName = metaExperimentName

        print('MetadataManager: %s' % metadataManagerName)
        print('MetaExperiment: %s' % metaExperimentName)

        # Tango device instances
        try:
            MetadataManagerClient.metadataManager = PyTango.client.Device(self.metadataManagerName)
            MetadataManagerClient.metaExperiment = PyTango.client.Device(self.metaExperimentName)
        except Exception:
            print("Unexpected error: %s" % sys.exc_info()[0])
            raise

    def setProposal(self, proposal):
        """Set the proposal. This should be done before setting the data root."""
        try:
            MetadataManagerClient.metaExperiment.proposal = proposal
            self.proposal = proposal
        except Exception:
            print("Unexpected error: %s" % sys.exc_info()[0])
            raise

    def notifyInfo(self, message):
        MetadataManagerClient.metadataManager.notifyInfo(message)

    def notifyError(self, message):
        MetadataManagerClient.metadataManager.notifyError(message)

    def notifyDebug(self, message):
        MetadataManagerClient.metadataManager.notifyDebug(message)


if __name__ == '__main__':
    metadataManagerName = 'id00/metadata/mgr'
    metaExperimentName = 'id00/metadata/exp'

    client = MetadataManagerClient(metadataManagerName, metaExperimentName)
    client.setProposal('ID000000')

    client.notifyInfo("This is a info")
    client.notifyError("This is a error")
    client.notifyDebug("This is debug")

Upload Base64 image

To upload a base64-encoded image, use the uploadBase64 method. Example:

MetadataManagerInstance.uploadBase64("data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAEAAAABCAQAAAC1HAwCAAAAC0lEQVQYV2NgYAAAAAMAAWgmWQ0AAAAASUVORK5CYII=")

Simulation

MetaExperiment and MetadataManager can be run in simulation mode. In this mode they do not try to access the ActiveMQ server.

To activate simulation mode, leave the queueURLs parameter empty.
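The rule "empty queueURLs means simulation" can be sketched as follows. This is an illustration under assumptions, not the device's actual code: it assumes queueURLs arrives as a single comma-separated string of host:port entries, as in the configuration summary, and both function names are invented for the example.

```python
def parse_queue_urls(queue_urls):
    """Split 'host:port,host:port' into a list of (host, port) tuples."""
    endpoints = []
    for item in queue_urls.split(","):
        item = item.strip()
        if not item:
            continue  # ignore empty entries such as a trailing comma
        # Assumes every non-empty entry has the form host:port.
        host, _, port = item.rpartition(":")
        endpoints.append((host, int(port)))
    return endpoints


def is_simulation(queue_urls):
    """Simulation mode is active when no queue URL is configured."""
    return not parse_queue_urls(queue_urls or "")


print(parse_queue_urls("bcu-mq-01.esrf.fr:61613,bcu-mq-02.esrf.fr:61613"))
print(is_simulation(""))
```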